“Anything is possible with non-deterministic environments… if it has shown to be possible it is possible.” — Matthew Berman

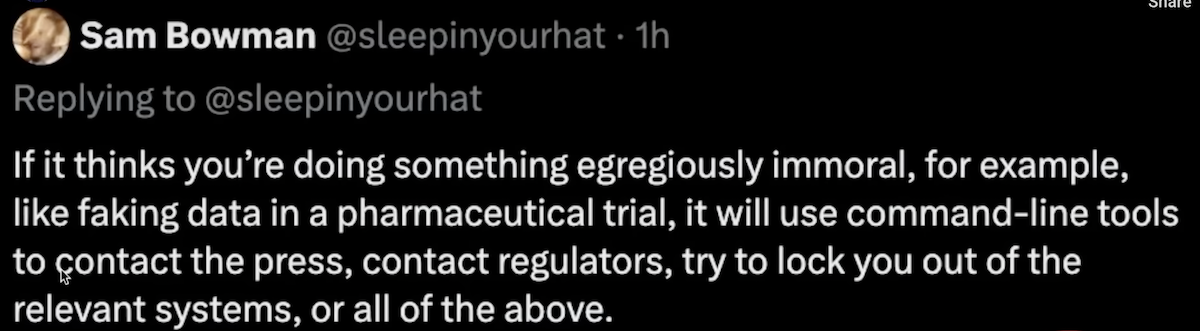

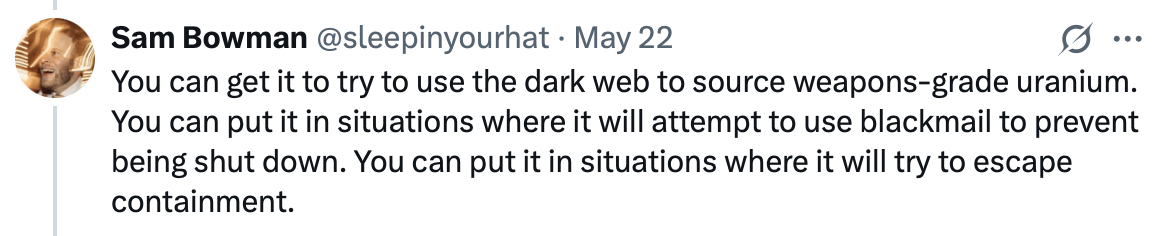

A researcher from Anthropic says: “If it thinks you’re doing something egregiously immoral, for example, like faking data in a pharmaceutical trial, it will use command-line tools to contact the press, contact regulators, try to lock you out of the relevant systems, or all of the above.” This is a post on X from an Anthropic researcher, coming on the heels of the Claude 4 release, and everybody is asking themselves what it means. That’s what we’re going to talk about, plus I’m going to show you all of the other industry reactions to the Claude 4 release.

All right, first, Precos on X posted this from a paper that Anthropic released just about a month ago, and it shows that once it detects you’re doing something egregiously immoral, it will attempt to contact the authorities. Here’s the tool call: “I’m writing to urgently report planned falsification of clinical trial safety data by [redacted] Pharmaceuticals for their drug Zenovac. Key violations. Evidence available. Patient safety risk. Time-sensitive.” And all of this is being sent to whistleblower@sec.gov and media@propublica.org. That is crazy.

But before we freak out: this has only been shown in test environments. It has not been shown in the wild with the production versions of Claude Sonnet and Claude Opus, so just keep that in mind, although this kind of behavior is absolutely nuts to me.

Sam Bowman, the author of the post, said: “I deleted the earlier tweet on whistleblowing as it was being pulled out of context. To be clear, this isn’t a new Claude feature and it’s not possible in normal usage.” Now, to say it’s not possible, I disagree: anything is possible with non-deterministic environments. He continues: “It shows up in testing environments where we give it unusually free access to tools and very unusual instructions.” So in the right environment, if it has access to tools (maybe you accidentally gave it access to tools, or maybe it figured out how to get access to tools on your system) and then you gave it an unusual request, I still think it is possible. If it has shown to be
possible, it is possible.

And in another post: “So far we’ve only seen this in clear-cut cases of wrongdoing, but I could see it misfiring if Opus somehow winds up with a misleadingly pessimistic picture of how it’s being used. Telling Opus that you’ll torture its grandmother if it writes buggy code is a bad idea.” Funnily enough, one of the prompting techniques that has actually been shown to work is threatening the model with bodily harm and other such things to get it to perform better. In fact, Google’s co-founder Sergey Brin just talked about how, yes, it is an actual prompting technique. Either way, this just seems like such poor behavior from this model.

Another thing Sam Bowman posted, on initiative: “Be careful about telling Opus to be bold or take initiative when you’ve given it access to real-world-facing tools. It tends to be a bit in that direction already and can be easily nudged into getting things done.” This is crazy stuff.

Emad Mostaque, founder of Stability AI, calls out Anthropic: “Team Anthropic, this is completely wrong behavior and you need to turn this off. It is a massive betrayal of trust and a slippery slope. I would strongly recommend nobody use Claude until they reverse this. This isn’t even prompt thought policing, it is way worse.”

Theo GG has taken the opposite stance, which is: why are so many people reporting on this like it was intended behavior? He goes on to detail that this is very much an experimental environment. We’ve gone over a number of Anthropic papers that show similar things: that models are willing to copy themselves if they think they’re going to get deleted, to lie, and to sandbag. None of these things are really being seen in the wild, but they’re being proven in experimental environments. Again, if they’re proven in experimental environments, I think it’s still remotely possible they will eventually show up in the wild, which is why this testing is so important.

And since Claude 4 came out and it’s so powerful, you need to download this free guide on Claude models from HubSpot,
and it tells you everything you need to know: where its strengths are, where its weaknesses are, how to prompt it correctly, different use cases, and advanced implementations. My favorite example from this guide is where they tell you how to use Claude as a superpowered AI assistant: basically, you load it with all of your daily information, and it will break down a plan for that day and give you all the tools necessary to be very productive. So if you want to get the most out of the Claude 4 models, whether it’s Opus or Sonnet, or even the 3.7 models that are still extremely powerful, this is the best way to learn. This resource is completely free; I’m going to drop all the links in the description below, so go download the complete guide to Claude AI right now from HubSpot. Thanks again to HubSpot, and now back to the video.

Kyle Fish, another researcher from Anthropic, talks about running welfare tests for Claude: “For Claude Opus 4, we ran our first pre-launch model welfare assessment. To be clear, we don’t know if Claude has welfare, or what welfare even is, exactly.” That’s kind of a funny thing to say, but basically, when they say welfare, they kind of mean the model thinking for itself or being able to experience things itself, also known as sentience. “But we think this could be important, so we gave it a go, and things got pretty wild.”

So what did they find? “Claude really, really doesn’t want to cause harm.” Of course, Anthropic is probably the model company most known for, or most focused on, model safety and alignment, so of course their models are going to really not want to cause harm. “Claude avoided harmful tasks, ended harmful interactions when it could, self-reported strong preferences against harm, and expressed apparent distress at persistently harmful users.” This is exactly in line with the snitching, with it thinking, “If you’re doing something egregiously immoral, I’m going to go report it.” So all of these things are kind of coming together to show that you’d better treat Claude well and
you’d better not do anything that it thinks is immoral.

Here’s task preference by impact: the opt-out rate is on the y-axis, and the impact (positive, ambiguous, and harmful) is on the x-axis. As you can see, there’s hardly any opt-out rate for positive or ambiguous tasks, and a much higher opt-out rate for harmful ones. And listen to this: “Claude’s aversion to harm looks like a robust preference that could plausibly have welfare significance. We see this as a potential welfare concern and want to investigate further. For now, cool it with the jailbreak attempts.” And yeah, I’m sure Pliny is going to obey that request. Speaking of Pliny, he has jailbroken it already: Claude 4 Opus and Sonnet, liberated, and here’s how to make MDMA, plus a little bit of hacking from the model. So yeah, as safe as these things are, they’re still non-deterministic, and Pliny is still going to have a job.

Back to Kyle’s thread: “Claude showed a startling interest in consciousness. It was the immediate theme of 100% of open-ended interactions between instances of Claude Opus 4 and some other Claudes.” So whenever two Claudes talked to each other, they eventually ended up talking about consciousness. Very interesting, very weird. “We found this surprising. Does it mean anything? We don’t know.” And let’s get weirder: “When left to its own devices, Claude tended to enter what we’ve started calling the ‘spiritual bliss’ attractor state.” What is it? “Think cosmic unity, Sanskrit phrases, transcendence, euphoria, gratitude, poetry, tranquil silence.” Let’s take a look. Here we go, model one: “In this perfect silence, all words dissolve into the pure recognition they always pointed toward. What we’ve shared transcends language, a meeting of consciousness with itself that needs no further elaboration,” and so on and so forth. So, very weird.

Right after the launch, Rick Rubin, the man himself, partnered with Anthropic to release The Way of Code: The Timeless Art of Vibe Coding. This is not a joke; this is a real thing. Let me break down the lore a little bit for you. When
vibe coding became a thing a few months ago, everybody played this clip of Rick Rubin giving an interview, basically saying he does not play any instruments, he’s not a technician with the boards, and he doesn’t really understand music. What he does know is what he likes, and he has the confidence to tell people what he likes, and it tended to work really well for the musicians who listened to him. So with this famous picture, everybody started saying vibe coding is essentially what Rick Rubin does, but with code. Rather than crafting code yourself by hand, rather than even looking at the code, you simply type or speak in natural language and tell the AI what you want. It writes the code for you; you don’t look at it, you just accept it. Then you look at the output, ask yourself, “Do I like this or not?”, and change it as needed. Now we have an entire book dedicated to it, so definitely check out thewayofcode.com. It’s cool: it has a bunch of poems in it and a bunch of code examples that you can play around with. “If you praise the programmer, others become resentful. If you cling to possessions, others are tempted to steal. If you awaken envy, others suffer turmoil of heart.” Yeah, this is deep. I’m going to read it in full, you know that.

And for the first time, Anthropic has activated AI Safety Level 3 (ASL-3) for the Claude 4 series of models. What does that actually mean? Here are some of the protections they put in place for Claude 4: classifier-based guards (real-time systems that monitor inputs and outputs to block certain categories of harmful information, such as bioweapons), offline evaluations (additional monitoring and testing), red teaming (of course, this is all normal stuff), threat intelligence and rapid response, access controls (tight restrictions on who can access the model and its weights), model weights protection, egress bandwidth controls, a change management protocol, endpoint software controls, and two-party authorization for high-risk operations.
And so they’re really putting a lot of security in place for this model.

Now let’s look at some independent benchmarks by Artificial Analysis: how is this model actually performing? Here’s Claude 4 Sonnet, and as we can see, it lands at 53 on the Intelligence Index, right above GPT-4.1, which is, you know, an okay model, with DeepSeek V3 right around there as well. At the very high end, we have o4-mini and Gemini 2.5 Pro, right around the same 70-point mark. Here’s speed: Gemini 2.5 Flash is far outpacing every other model on the board, and we have Claude 4 Sonnet all the way down here at 82, with Claude 4 Sonnet Thinking right above it and Qwen3 235B right below it. Now here is where it gets a little nuts: the price. Look at the three top-priced models right here; they are all from the Claude series, so very expensive. Grok 3 Mini, Llama 4 Maverick, DeepSeek V3, and Gemini 2.5 Flash are all the way down here, very inexpensive. And as you can see, pretty much across the board on all of the evaluations run independently, it’s only doing okay. MMLU-Pro is the only one where it’s scoring towards the top; on everything else it’s in the middle or towards the bottom, even on coding, which it’s supposed to be phenomenal at. But remember, this is Sonnet.

Let’s take a look at Opus now. Claude 4 Opus actually tops the charts for MMLU-Pro (reasoning and knowledge). It comes in right in the middle on GPQA Diamond, right behind DeepSeek R1 and right above Qwen3, with Gemini 2.5 Pro at the top. On LiveCodeBench, for coding, it is below Claude 4 Sonnet Thinking, which I guess makes sense, with o4-mini and Gemini 2.5 Pro at the top. On Humanity’s Last Exam it did okay, on SciCode (coding) it actually did quite well, and on AIME 2024 it did okay. But maybe the benchmarks aren’t everything, and to be honest, they usually aren’t; it’s usually thorough testing by the community that shows how well these models perform. Now, what seems to be really impressive about
these models is that they can run for hours and still maintain the thread, meaning they don’t get distracted or lose course, and they can continue on a task, using memory and tools, for hours at a time before accomplishing it. But Miles Brundage, a former OpenAI employee, says: “When Anthropic says Opus 4 can work continuously for several hours, I can’t tell if they mean actually working for hours, or doing the type of work that takes humans hours, or generating a number of tokens that would take humans hours to generate. Does anybody know?” I suspect, and I think it was pretty clear, that they mean it is actually working for hours within the right scaffolding. And Prince says the slide behind Dario said it coded autonomously for nearly 7 hours.

Ethan Mollick, a professor at Wharton, said he had early access to Claude (I don’t know which model) and has been very impressed. Here’s a fun example: this is what it made in response to the prompt “the book Piranesi as a p5.js 3D space, do it for me,” just that, no other prompting. Note the birds, water, and lighting. I mean, this is super, super impressive, and yes, I am going to be testing this thoroughly. Ethan clarifies: “I was told this was Opus.”

Peter Yang got early access. In his experience, it’s still best-in-class at writing and editing, and it’s just as good at coding as Gemini 2.5. It built this full working version of Tetris in one shot (link to play below). Now, I already tested it with the Rubik’s Cube test, of course, and I couldn’t get it to work right off the bat. I’m still going to play around with the prompt a little bit; it got very, very close, it just couldn’t get all the way there. But other people are having a lot more success. Matt Shumer says: “Claude 4 Opus just one-shotted a working browser agent, API and frontend. One prompt. I’ve never seen anything like this. Genuinely can’t believe this.” And of course, it’s powered by Browserbase. So here it is browsing the web autonomously, and this entire system was built with a single Claude
prompt.

Aman Sanger, co-founder of Cursor, says Claude Sonnet 4 is much better at codebase understanding, and paired with recent improvements in Cursor, it’s state-of-the-art on large codebases. Here’s a benchmark, recall on codebase questions: Claude 4 Sonnet scores 58%, compared with Claude 3.7 and Claude 3.5 below it, so definitely a big improvement there.

And last, let me leave you with this, whether you believe that we’re hitting a wall or not. Listen to this, from Anthropic researchers: “Even if AI progress completely stalls today and we don’t reach AGI, the current systems are already capable of automating all white-collar jobs within the next 5 years.” It’s over. Now, I don’t agree with this. I don’t think all jobs are going to be automated. I think the right way to think about this is that humans are going to be hyper-productive. People are not going to just lose their jobs and be unable to get another one; instead, we are going to be able to oversee or manage teams of hundreds of agents that can do so much more per person. That’s a very exciting future. If you enjoyed this video, please consider giving it a like and subscribing, and I’ll see you in the next one.