First, do no harm.

1,500+ Posts…

Free knowledge sharing for Safe AI. Not for profit. Link-outs to sources are provided. Ads are likely to appear on link-outs (this journal's publisher receives no benefit from them).

OpenLetter. Disrupting the Deepfake Supply Chain. February 21, 2024.

FOR EDUCATIONAL AND KNOWLEDGE SHARING PURPOSES ONLY. NOT-FOR-PROFIT. SEE COPYRIGHT DISCLAIMER. Context: Many experts have warned that artificial intelligence (“AI”) could cause significant harm to humanity if not handled responsibly[1][2][3][4]. The impact of AI [...]

Uncontrollable: The Threat of Artificial Superintelligence and the Race to Save the World. 17 Nov. 2023. Amazon Books.

Paperback, 17 Nov. 2023, by Darren McKee. Rated 4.7 out of 5 stars (30 ratings). #1 BEST [...]

OpenAI. Introducing Sora, our text-to-video model. Sora can generate videos up to a minute long while maintaining visual quality and adherence to the user’s prompt.

Wow. We’re teaching AI to understand and simulate the physical world in motion, with the goal of training models that help people solve problems that [...]

CNBC. Richard Branson and Oppenheimer’s grandson urge action to stop AI and climate ‘catastrophe’. 15 FEB.

More precisely, "Safety Engineering" is the solution for Safe AI. Tegmark told CNBC in [...]

REPORT. 2023 STATE OF DEEPFAKES: Realities, Threats, and Impact.

Infographic key findings: 01. The total number of deepfake videos online in 2023 is 95,820, representing a 550% increase over 2019. 02. Deepfake [...]

The Elders and Future of Life Institute release open letter calling for long-view leadership on existential threats. 15 FEB.

On 15 February 2024, The Elders and the Future of Life Institute released an open letter [...]

Sam Altman at the World Governments Summit. What keeps Altman up at night? “very subtle societal misalignments where we just have these systems out in society and through no particular ill intention um things just go horribly wrong”

WORLD GOVERNMENTS SUMMIT: Talk about what is the thing that you fear most when it comes to um the deployment of AI, and the thing you're most optimistic about. [...]

CNBC. Nvidia passes Alphabet in market cap and is now the third most valuable U.S. company. 14 FEB.

Published Wed, Feb 14 2024, 5:33 PM EST, by Kif Leswing (@KIFLESWING). Key points: The symbolic milestone is more [...]

World Governments Summit, Dubai. A Conversation with the Founder of NVIDIA: Who Will Shape the Future of AI? 12 FEB.

A very important interview with Jensen Huang, CEO of Nvidia. [...]

FMF Joins USAISI Consortium as a Founding Member. By: Frontier Model Forum. Posted on: 8th February 2024.

FMF members: Anthropic, Google, Microsoft, OpenAI. The Frontier Model Forum is [...]

GOV.UK. Guidance. Implementing the UK’s AI regulatory principles: initial guidance for regulators. Voluntary guidance for regulators to support them to implement the UK’s pro-innovation AI regulatory principles. Published 6 February 2024.

Documents: Implementing the UK’s AI regulatory [...]

Geoffrey Hinton | Will digital intelligence replace biological intelligence? 2 FEB.

02 February 2024. CONVOCATION [...]

Yuval Noah Harari. “Humans were always far better at inventing tools than using them wisely.”

Is the huge positive potential of AI worth the risk of things going very wrong? Full [...]

Eric Topol. Ground Truths. Geoffrey Hinton: Large Language Models in Medicine. They Understand and Have Empathy. 30 JAN.

TOPOL. It's still an ongoing and intense debate and in some ways it really [...]

Artificial intelligence ‘godfather’ Yoshua Bengio opens up about his hopes and concerns. 29 JAN.

"Living things all have an instinct to preserve themselves which is fine so long as they're not more [...]

Google News. About 71,100 results: “Taylor Swift deepfakes”. 27 JAN.

Taylor Swift's AI pictures go viral. BBC: Taylor Swift deepfakes spark calls in Congress for new legislation. US politicians have called for [...]

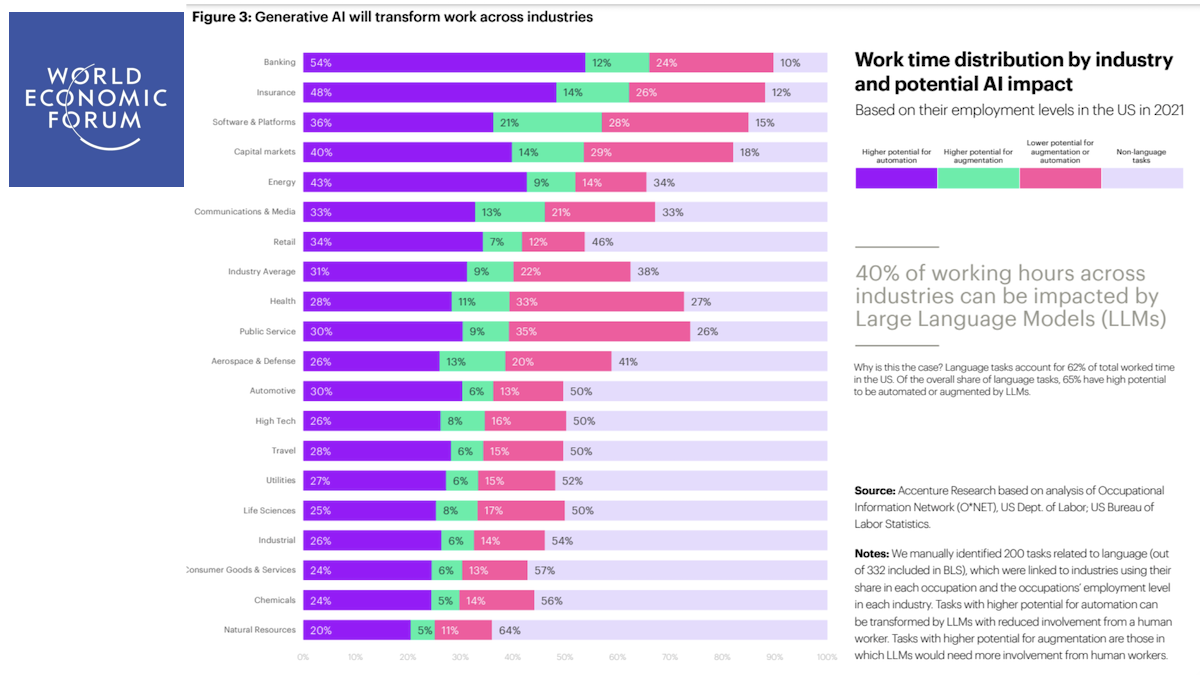

Jobs and AI. A curated list of how jobs are expected to be affected by AI.

Exceptionally relevant data from highly respected sources! Curated list of how jobs will be affected by AI: Elon Musk tells Rishi Sunak AI will put an end to work. [...]

PREDICTION [opinion]. Within 10 years, 70-80% of current G20 manual and knowledge-worker jobs will be either (A) physically automated by robots or (B) digitally automated by AGI.

Therefore, most humans of working [...]

FORBES. Half Of All Skills Will Be Outdated Within Two Years, [edX] Study Suggests. (2023)

A very good read from a respected source! "The C-Suite executives surveyed estimate that nearly half (49%) of the skills that exist in their workforce today won’t be [...]

CONSUMER REPORTS. Who Shares Your Information with Facebook?

A very good read from a respected source! Consumer Reports and the nonprofit The Markup report that for the average single [...]

CNBC. DAVOS 2024. Saudi Arabia goes big in Davos as it looks to become a top AI tech hub for the Middle East. Published Fri, Jan 19 2024, 11:20 AM EST, by MacKenzie Sigalos.

A very good read from a respected source! [...]

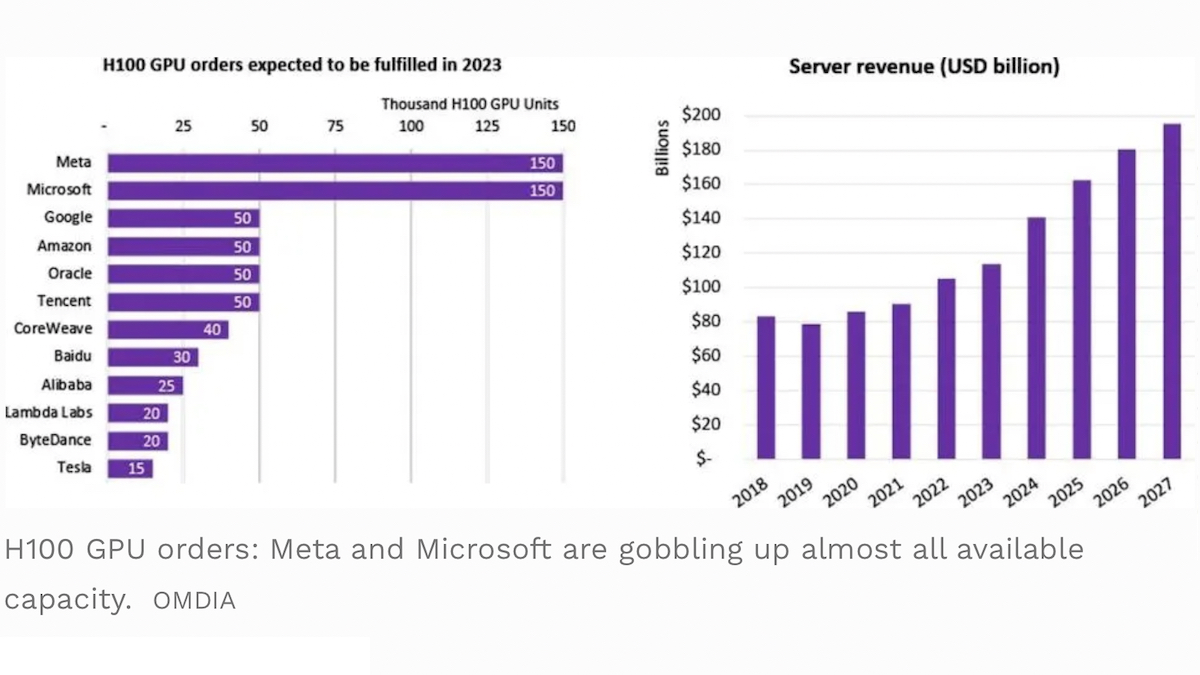

FORBES. Zuckerberg on AI: Meta Building AGI For Everyone And Open Sourcing It. 18 JAN.

By John Koetsier, 18 January 2024. Meta CEO Mark Zuckerberg just announced on Threads that he’s focusing Meta on building [...]

WEF. DAVOS 2024. Chinese Premier Li Qiang called AI “a double-edged sword.” 17 JAN.

“Human beings must control the machines instead of having the machines control us,” he said in a [...]