First, do no harm.

1,500+ Posts…

Free knowledge sharing for Safe AI. Not for profit. Link-outs to sources provided. Ads are likely to appear on link-outs (zero benefit to this journal's publisher).

NATURE. The world’s week on AI safety: powerful computing efforts launched to boost research. UK and US governments establish efforts to democratize access to supercomputers that will aid studies on AI systems. 03 NOV 2023.

FOR EDUCATIONAL AND KNOWLEDGE SHARING PURPOSES ONLY. NOT-FOR-PROFIT. SEE COPYRIGHT DISCLAIMER. [...]

GOV UK. Policy paper. Introducing the AI Safety Institute. 02 November 2023

Presented to Parliament by the Secretary of State for Science, Innovation and Technology by Command of His Majesty. November 2023. [...]

GOV UK. Policy paper. Safety Testing: Chair’s Statement of Session Outcomes, 2 November 2023.

Meeting at Bletchley Park today, 2 November 2023, and building on the Bletchley Declaration by Countries Attending the [...]

GOV UK. Press release. Technology Secretary announces investment boost making British AI supercomputing 30 times more powerful. British supercomputing to be boosted 30-fold with a new Cambridge computer and Bristol site. 01 NOV 2023.

From: [...]

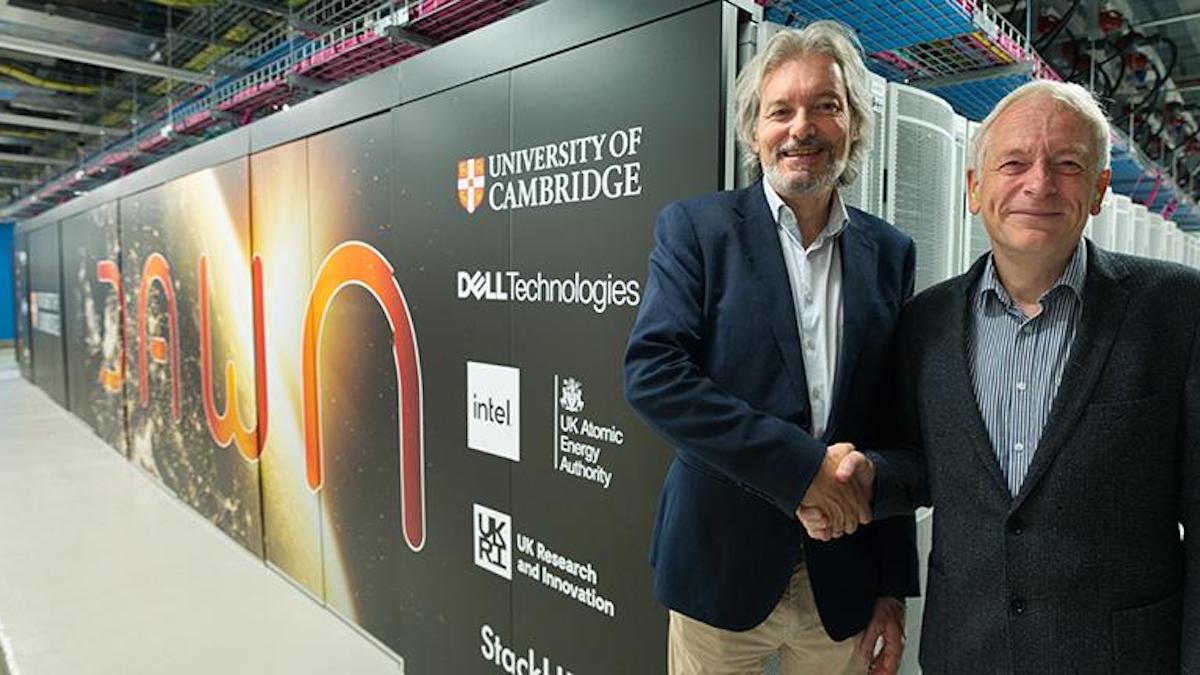

UNIV. CAMBRIDGE NEWS. Cambridge, Intel and Dell join forces on UK’s fastest AI supercomputer. 01 NOV 2023.

The Cambridge Open Zettascale Lab is hosting Dawn, the UK’s fastest artificial intelligence (AI) supercomputer, [...]

CNBC. UK invests $273 million in AI supercomputer as it seeks to compete with U.S., China. 01 NOV 2023.

KEY POINTS: The U.K. government said Wednesday that it will invest £225 million, [...]

THE GUARDIAN. Ilya: the AI scientist shaping the world.

"We definitely will be able to create completely autonomous beings with their own goals. And it will be very important, especially as these beings become much smarter than humans, it's going to [...]

No Priors Ep. 39 | With OpenAI Co-Founder & Chief Scientist Ilya Sutskever. 02 NOV 2023.

2 Nov 2023. Each iteration of ChatGPT has demonstrated remarkable step function capabilities. But what’s next? Ilya Sutskever, Co-Founder & Chief Scientist at OpenAI, joins Sarah Guo and Elad Gil to discuss the origins of OpenAI as a capped profit company, early emergent behaviors of GPT [...]

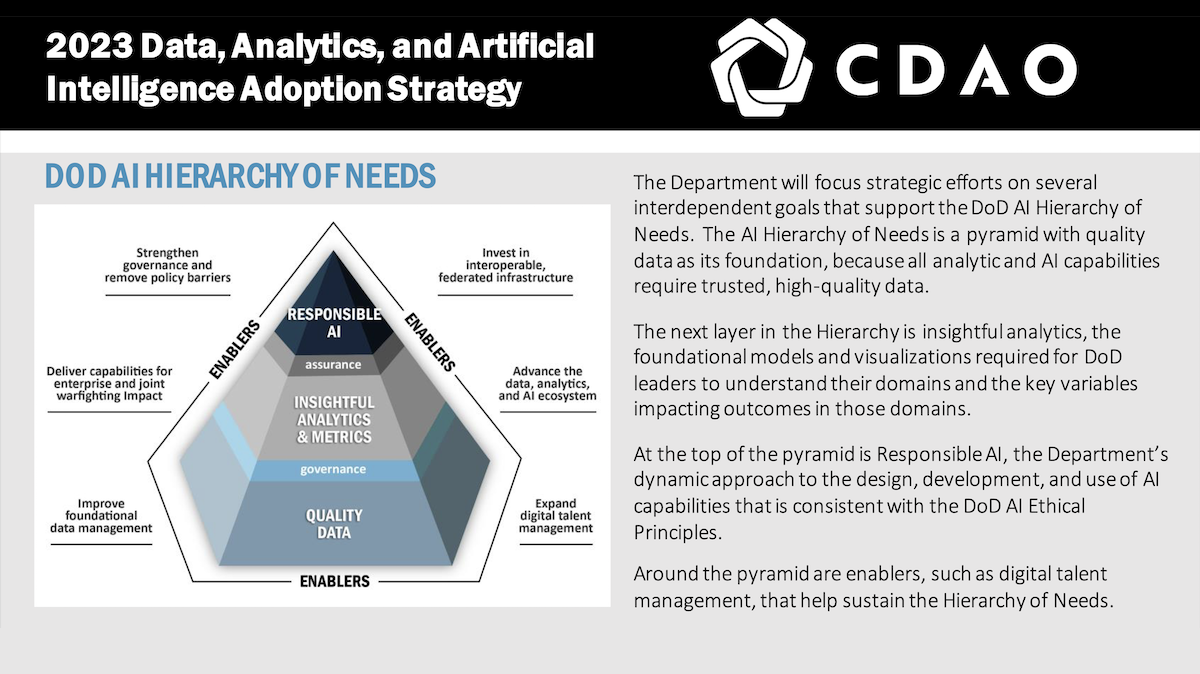

U.S. DEPARTMENT OF DEFENSE (DoD). Data, Analytics, and Artificial Intelligence Adoption Strategy: Accelerating Decision Advantage. 02 NOV 2023.

FACT SHEET by the CHIEF DIGITAL AND ARTIFICIAL INTELLIGENCE OFFICE (CDAO) [...]

GOV UK. Press release. Prime Minister launches new AI Safety Institute. World’s first AI Safety Institute launched in UK, tasked with testing the safety of emerging types of AI. 02 NOV 2023.

From: Prime Minister's Office, [...]

THE WASHINGTON POST | AP. Countries at a UK summit pledge to tackle AI’s potentially ‘catastrophic’ risks. November 1, 2023.

By Kelvin Chan and Jill Lawless | AP. BLETCHLEY PARK, England: Delegates from 28 nations, including the U.S. [...]

THE NEW YORK TIMES. Global Leaders Warn A.I. Could Cause ‘Catastrophic’ Harm. At a U.K. summit, 28 governments, including China and the U.S., signed a declaration agreeing to cooperate on evaluating the risks of artificial intelligence. 01 NOV 2023.

GOV UK. Press release. Nations and AI experts convene for day one of first global AI Safety Summit. Leading AI nations, organisations and experts meet at Bletchley Park today to discuss the global future of AI and work towards a shared understanding of risks. 01 NOV 2023.

GOV UK. Policy paper. The Bletchley Declaration by Countries Attending the AI Safety Summit, 1-2 November 2023.

Published 1 November 2023. Artificial Intelligence (AI) presents enormous global opportunities: it has the potential [...]

AI Summit at Bletchley Park, UK. Elon Musk: ‘AI is one of the biggest threats to humanity’. 01 NOV 2023.

“We have for the first time the situation where we [...]

GOV UK. Guidance. AI Safety Summit: confirmed attendees (governments and organisations). Updated 31 October 2023.

Academia and civil society: Ada Lovelace Institute, Advanced Research and Invention Agency, African Commission on Human and [...]

BBC NEWS. AI: Scientists excited by tool that grades severity of rare cancer. 01 NOV 2023.

An excellent example of the benefits of narrow AI applications. By Fergus Walsh [...]

FLI recommendations for the UK Global AI Safety Summit, Bletchley Park, 1-2 November 2023.

An excellent policy document. Highly recommended! “The time for saying that this is just pure research has long [...]

Existential Risk Observatory. AI Summit Talks featuring Professor Stuart Russell. 31 OCT 2023.

What if we succeed? Lift the living standards of everyone on Earth to a respectable level; 10x increase in [...]

THE WHITE HOUSE. FACT SHEET: President Biden Issues Executive Order on Safe, Secure, and Trustworthy Artificial Intelligence. OCTOBER 30, 2023.

Today, President Biden is issuing a landmark Executive Order to ensure that [...]

BBC NEWS. US announces ‘strongest global action yet’ on AI safety. 30 OCT 2023.

By Shiona McCallum & Zoe Kleinman, Technology team. The White House has announced what it is calling "the [...]

WANTED: Provably Safe AI for The Benefit of People, Forever.

Learn about p(doom) and how we can increase our probability of survival: demand mathematically provable Safe AI. Take a tea, coffee, or water and five minutes to understand it (download the PDF for convenience) [...]