“Artificial intelligence could be a real danger in the not-too-distant future. It could design improvements to itself and out-smart us all.” (Stephen Hawking, 2014)

Why my p(stop/doom) = 99%

BECAUSE:

FACT: Mutualistic Symbiosis is a very successful biological strategy.

IF the Corals can do it with algae for 210 million years…

THEN Homo sapiens can do it with ASI for 1,000 years (a good start)

p(doom) = the probability that Artificial Superintelligence (ASI) technology dooms humanity.

Scientific opinions on p(doom) range from 0.00001% to 99.99999%, with a relatively high average of 10 to 20% probability of human extinction from ASI.

The truth is:

- Nobody knows what will happen in the future with ASI.

- Nobody currently fully understands how, or why, Large Language Models (LLMs) like ChatGPT actually work.

- IF the industry continues on its current trajectory (with zero safety), THEN p(doom) is certainly 10 or 20% or more…

But, the fact is:

- Corals and algae have an IQ of exactly 0, but they have survived together through mutualistic symbiosis for 210 million years.

- Homo sapiens have evolved and survived largely because of: (A) intelligence and (B) the ability to cooperate in large groups.

- Given functional cooperation, the collective intelligence of Homo sapiens is vast, and it will soon become exponentially greater with AI.

- IF we cooperate effectively, THEN Homo sapiens can engineer SafeAI with a high probability of success. (Hence my humble opinion: 99.99%.)

CONTAINMENT is THE REQUIREMENT.

CONTAINMENT is POSSIBLE.

ASI certainly cannot violate the LAWS of PHYSICS.

FUNCTIONAL human societies create and enforce SOCIAL LAWS.

IF global cooperation, THEN humans can survive the 21st Century.

Learn more:

- p(doom)

- When corals met algae: Symbiotic relationship crucial to reef survival dates to the Triassic – Princeton University

- Leakproofing the Singularity. Artificial Intelligence Confinement Problem | Roman V. Yampolskiy (2011)

- TED. What happens when our computers get smarter than we are? | Nick Bostrom (2015)

- Guidelines for Artificial Intelligence Containment | Babcock, Kramer, Yampolskiy (2017)

- AI: Unexplainable, Unpredictable, Uncontrollable. | Yampolskiy (2024)

- For Humanity Podcast

- Future of Life Institute; Artificial Intelligence

- NIST U.S. ARTIFICIAL INTELLIGENCE SAFETY INSTITUTE

- Discover SafeAI Forever and the Human Message to the Machine: SafeAI Forever.

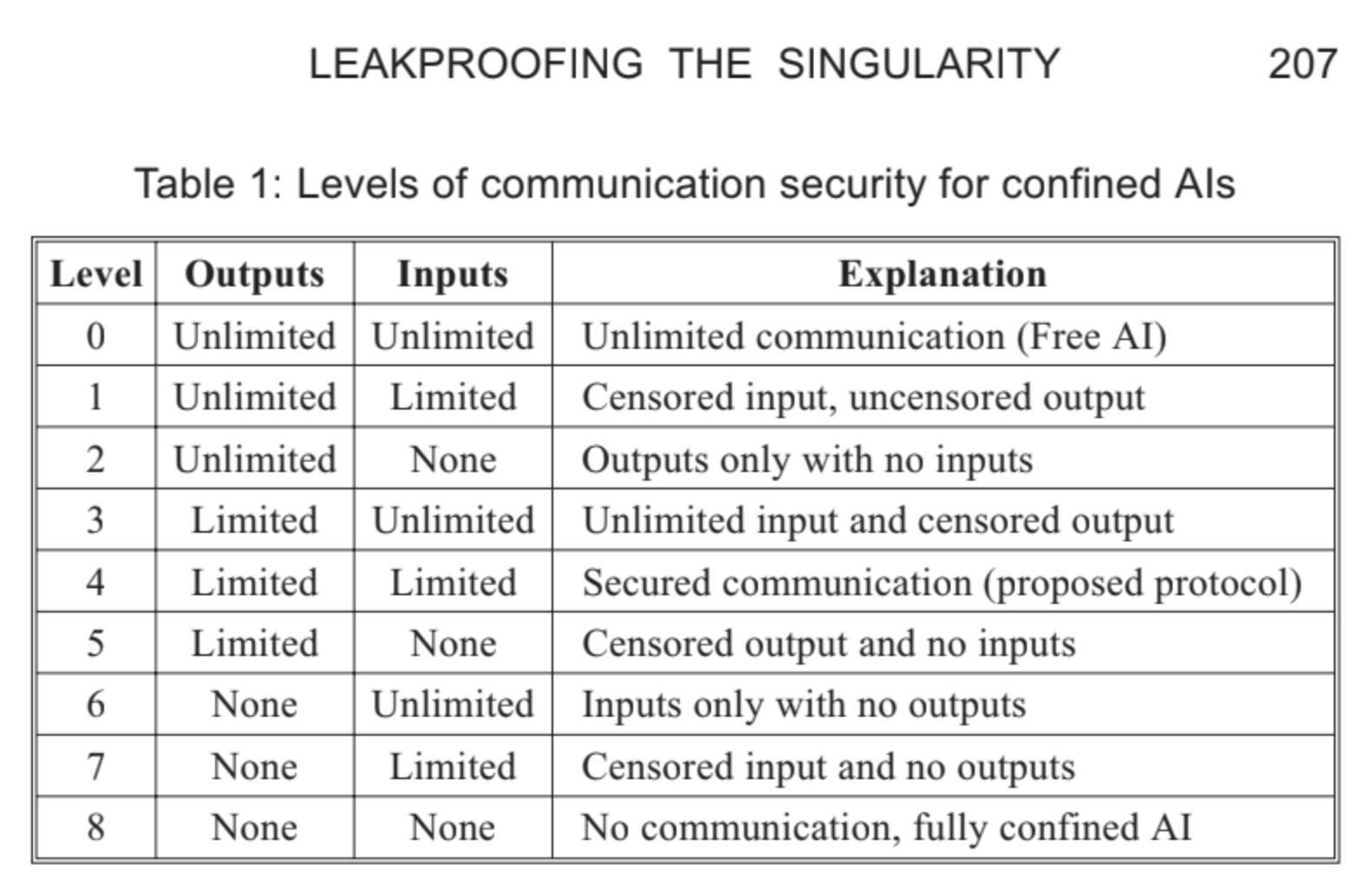

Given that LLM “jailbreaks” are commonly understood and openly published, the current industry AI Safety Level = 0.

HINT: For a reasonably safe future, we require Level 4, with mathematically provable containment.

Source: Leakproofing the Singularity. Artificial Intelligence Confinement Problem | Roman V. Yampolskiy (2011)