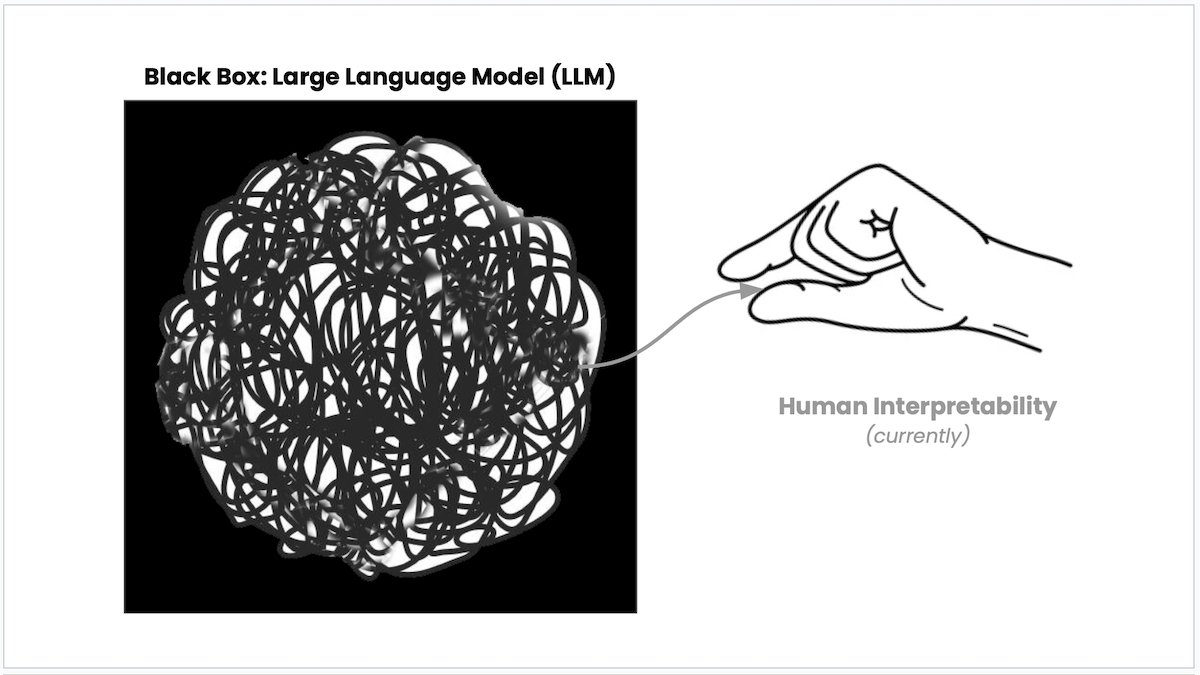

AI scientists currently have no idea how Large Language Models (LLMs) actually work on the inside. They are a “black-box” technology.

“I think we have a lot of evidence that actual reasoning is occurring in these models and that like they’re not just stochastic parrots” — Sholto Douglas, Google DeepMind

.@TrentonBricken explains how we know LLMs are actually generalizing – aka they’re not just stochastic parrots:

– Training models on code makes them better at reasoning in language.

– Models fine tuned on math problems become better at entity detection.

– We can just… pic.twitter.com/1PfAIMyXsa

— Dwarkesh Patel (@dwarkesh_sp) March 27, 2024

“Human level AI is deep deep into an intelligence explosion. The intelligence explosion has to start with something weaker than that.”

I don’t know if there’s this circuit that’s weak and getting stronger. I don’t know if it’s something that works, but not very well. I think we don’t know, and these are some of the questions we’re trying to answer with mechanistic interpretability.

If you had to put it in terms of human psychology, what is the change that is happening? Are we creating new drives, new goals, new thoughts? How is the model changing in terms of psychology?

I think all those terms are kind of inadequate for describing what’s happening. It’s not clear how useful they are as abstractions for humans either. I think we don’t have the language to describe what’s going on. I’d love to look inside and actually know what we’re talking about instead of basically making up words, which is what I do, and what you’re doing in asking this question. We should just be honest: we really have very little idea what we’re talking about. So it would be great to say, well, what we actually mean by that is that this circuit within here turns on, and after we’ve trained the model, this circuit is no longer operative, or weaker. I would love to be able to say that. Again, it’s going to take a lot of work to be able to do that.

Mechanistically, what is alignment? Is it that you’re locking the model into a benevolent character? Are you disabling deceptive circuits and procedures? What concretely is happening when you align a model?

I think, as with most things, when we actually train a model to be aligned, we don’t know what happens inside the model. There are different ways of training it to be aligned, but I think we don’t really know what happens. I think all the current methods that involve some kind of fine-tuning have the property that the underlying knowledge and abilities that we might be worried about don’t disappear. The model is just taught not to output them. I don’t know if that’s a fatal flaw or if that’s just the way things have to be.

I think this problem would be much easier if you had an oracle that could just scan a model and say, okay, I know this model is aligned, I know what it’ll do in every situation. Then the problem would be much easier. I think the closest thing we have to that is something like mechanistic interpretability. It’s not anywhere near up to the task yet, but mechanistic interpretability is the only thing that, even in principle, is more like an X-ray of the model than a modification of the model. It’s more like an assessment than an intervention.

I don’t know what’s going on inside mechanistically, and I think that’s the whole point of mechanistic interpretability: to really understand what’s going on inside the models at the level of individual circuits.

I think what we’re hoping for in the end is not that we’ll understand every detail. Again, I would give the X-ray or the MRI analogy: we can be in a position where we can look at the broad features of the model and ask, is this a model whose internal state and plans are very different from what it externally represents itself to do? Is this a model where we’re uncomfortable that far too much of its computational power is devoted to doing what look like fairly destructive and manipulative things?

I’d give an analogy to humans. It’s actually possible to look at an MRI of someone and predict above random chance whether they’re a psychopath. There was actually a story a few years back about a neuroscientist who was studying this, and he looked at his own scan and discovered that he was a psychopath. Then everyone in his life was like, no, no, this is just obvious, you must be a psychopath. And he was totally unaware of this. The basic idea is that there can be these macro features, and psychopath is probably a good analogy for it. This is what we’d be afraid of: a model that’s kind of charming on the surface, very goal-oriented, and very dark on the inside.

Do you think that Claude has conscious experience? How likely do you think that is?

This is another of these questions that just seems very unsettled and uncertain. Conscious is again one of these words that I suspect will not end up having a well-defined meaning. I suspect that’s a spectrum. So I don’t know. Let’s say we discover that I should care about Claude’s experience as much as I should care about a dog or a monkey or something. I would be kind of worried. I don’t know if their experience is positive or negative. Unsettlingly, I also wouldn’t know if any intervention that we made was more likely to make Claude have a positive versus negative experience versus not having one. If there’s an area that is helpful with this, it’s maybe mechanistic interpretability, because I think of it as neuroscience for models. So it’s possible that we could shed some light on this, although it’s not a straightforward factual question.